How to create AI images on your own old PC

with just a 4 GB graphics card!

AI generated image of 22 year old woman, room, landscape

In 2022, AI image creation was for expensive PCs only, but things have changed.

I have a 10 year old PC with a power supply that has only 6-pin connectors, which limits me to a handful of possible cards. I chose a $200 graphics card, a Palit GTX 1650, which is silent during desktop use and still nearly silent when rendering. And yes, it can render AI images like the one above!

Stable Diffusion WebUI setup, step by step


- Install Python 3.10. It must be exactly version 3.10, not a newer one. I downloaded 3.10.10 for Windows (python-3.10.10-amd64.exe) from python.org. When asked whether to extend the system's maximum path length limit, I said no, as I don't think that is needed.

- Install Git. The latest version at that time (Git-2.40.0-64-bit.exe) worked fine for me. It asks many questions during installation; just use the defaults everywhere. If asked whether to extend the cmd.exe PATH variable, say yes.

- Open the CMD.EXE command line.

Type for a test:

py - you should get the output "Python 3.10.10". Type quit() or press CTRL+Z, then Enter, to exit the Python prompt.

git - you should get the Git help text.

If a command is not found: py.exe should be in C:\Windows, and git.exe in C:\Program Files\Git\cmd.

- Create and enter a work folder:

C:

cd \

mkdir sd

cd sd

- Download Stable Diffusion WebUI:

git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git

- Enter the Stable Diffusion folder:

cd stable-diffusion-webui

and run the WebUI with:

webui-user.bat

On the first run, it will download gigabytes of data, which can take a while. Finally it says: "Running on http://127.0.0.1:7860"

- Open a web browser and type:

127.0.0.1:7860

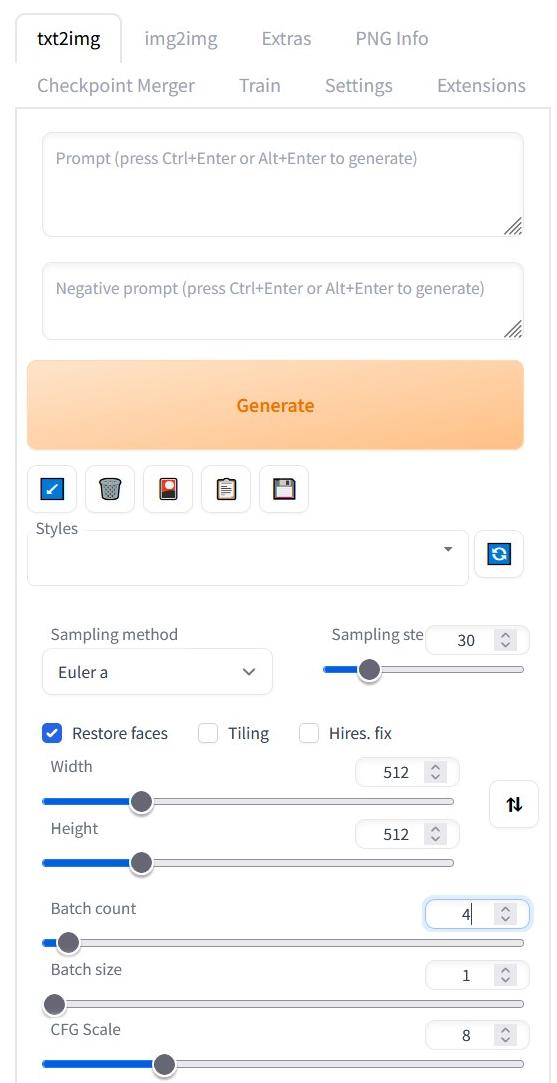

You will see the Stable Diffusion WebUI.

- Create your first image:

- Copy into prompt:

woman, eye level, full shot. detailed background, coast with flowers.

Eye level means we don't want to look up at the subject like an ant would, and full shot means the subject should be neither too close nor too far away, but should fill the image.

- Copy into negative prompt:

deformed, bad anatomy, disfigured, poorly drawn face, mutation, mutated, extra limb, ugly, disgusting, poorly drawn hands, missing limb, floating limbs, disconnected limbs, malformed hands, blurry, ((((mutated hands and fingers)))), watermark, watermarked, censored, distorted hands, amputation, missing hands, obese, doubled face, double hands

Why the huge negative prompt? In my experience, if you write just a short one like "deformed, bad anatomy, disfigured", you get an out-of-memory error! You might then think your graphics card is too weak, or that 512x512 resolution is already too high. But no: just supply a long negative prompt, and it works. My explanation: if the AI has to consider too many ways the image could look, it needs more memory, so tell it to consider fewer.

- Sampling steps: 30

- Restore faces: activate

- Width, height: 512

- Batch count: 4

- CFG Scale: 8

- Click "Generate".

With my graphics card, this takes 90 seconds per image. It will create 4 images, so I have to wait 6 minutes.
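The same settings can also be written down as data. The sketch below is not part of the setup described here: it assumes WebUI was started with the `--api` command line flag, which exposes a `/sdapi/v1/txt2img` endpoint, and that the field names match your WebUI version. `build_payload` and `send_txt2img` are helper names made up for this illustration.

```python
import json
import urllib.request

PROMPT = "woman, eye level, full shot. detailed background, coast with flowers."
NEGATIVE_PROMPT = (
    "deformed, bad anatomy, disfigured, poorly drawn face, mutation, mutated, "
    "extra limb, ugly, disgusting, poorly drawn hands, missing limb, "
    "floating limbs, disconnected limbs, malformed hands, blurry, "
    "((((mutated hands and fingers)))), watermark, watermarked, censored, "
    "distorted hands, amputation, missing hands, obese, doubled face, double hands"
)


def build_payload(prompt, negative_prompt):
    """Map the WebUI settings from the steps to txt2img API fields (assumed names)."""
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "steps": 30,            # sampling steps
        "restore_faces": True,  # "Restore faces: activate"
        "width": 512,
        "height": 512,
        "n_iter": 4,            # batch count
        "cfg_scale": 8,
    }


def send_txt2img(payload, base_url="http://127.0.0.1:7860"):
    """POST the payload; only works if WebUI runs with --api (assumption)."""
    request = urllib.request.Request(
        base_url + "/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        # The response is expected to contain base64-encoded images.
        return json.loads(response.read())["images"]


payload = build_payload(PROMPT, NEGATIVE_PROMPT)
print(json.dumps(payload, indent=2))
```

The script only prints the payload. Calling `send_txt2img(payload)` would start the same 4-image render as clicking "Generate", so expect the same waiting time.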

Example outputs:

They don't look very interesting yet, because the prompt was so short. You have to add prompt details for pose and environment to make it more interesting.

Especially when rendering humans, many of the resulting images can be ugly, with distorted limbs and faces. You may have to render 5 to 10 images to get a single good result. You also have to add details to the negative prompt for better results.
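To put these numbers together: at 90 seconds per image, needing 5 to 10 renders for one good result means a noticeable wait per keeper. A small sketch of the arithmetic, using only the figures quoted in this article (the function name is made up for illustration):

```python
SECONDS_PER_IMAGE = 90  # the author's measurement on a GTX 1650


def minutes_for(images, seconds_per_image=SECONDS_PER_IMAGE):
    """Total render time in minutes for a number of images."""
    return images * seconds_per_image / 60


print(minutes_for(4))   # one batch of 4 images: 6.0
print(minutes_for(5))   # best case for one good result: 7.5
print(minutes_for(10))  # worst case for one good result: 15.0
```

So on this card, one good image of a person costs roughly 7.5 to 15 minutes of rendering.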

The rendered images have a 512x512 pixel resolution; a 4 GB card cannot handle more. ("Hires. fix" directly in WebUI will not help.) But AI also allows magic upscaling, where it adds details by guessing what the upscaled image should look like. I did not find the upscalers in WebUI satisfying, as the results looked too artificial. But there is a free tool called Upscayl, which produces very good results in the mode General Photo (Ultramix Balanced).

512x512

2048x2048 upscayled
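To confirm that an upscaled file really has four times the width and height of the original, the pixel size can be read from the PNG header. This is a minimal sketch using only the Python standard library. It assumes both files are saved as PNG, and the function names are made up for illustration.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_size(data):
    """Return (width, height) from the first 24 bytes of a PNG file."""
    if data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    # Width and height are two big-endian 32-bit integers after "IHDR".
    return struct.unpack(">II", data[16:24])


def scale_factor(original, upscaled):
    """Width ratio between two (width, height) tuples."""
    return upscaled[0] / original[0]
```

For a real file, pass in `open(path, "rb").read(24)`. Going from 512x512 to 2048x2048 gives a factor of 4 per side, which is 16 times as many pixels.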

Choosing the right SD model

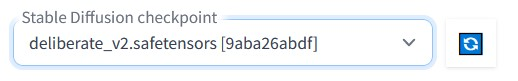

By default, Stable Diffusion WebUI uses a 4 GB file named v1-5-pruned-emaonly.ckpt, containing the trained model data used for image generation.

But the best model file in my opinion is Deliberate, available on civitai. So do these steps:

- Download the 2 GB deliberate_v2.safetensors from civitai, and store it in stable-diffusion-webui\models\Stable-diffusion, next to v1-5-pruned-emaonly.ckpt.

- Go into the WebUI, click the blue refresh button, and select the file.

It may take a minute until the file is loaded.

That's it. You can continue writing prompts as before, but now the new model is used to generate the images.
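If the new model does not show up in the list, it helps to check that the file really landed in the right folder. The following is a small sketch, assuming the work folder C:\sd from the setup steps; `list_models` is a name made up for this example.

```python
from pathlib import Path

MODEL_SUFFIXES = {".ckpt", ".safetensors"}


def list_models(models_dir):
    """Return (file name, size in GB) for each model file in the folder."""
    found = []
    for path in sorted(Path(models_dir).iterdir()):
        if path.is_file() and path.suffix.lower() in MODEL_SUFFIXES:
            size_gb = round(path.stat().st_size / 1024**3, 1)
            found.append((path.name, size_gb))
    return found
```

Calling `list_models(r"C:\sd\stable-diffusion-webui\models\Stable-diffusion")` should then list both deliberate_v2.safetensors and v1-5-pruned-emaonly.ckpt with their sizes.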

View example galleries

Useful tools

iView is a free lightweight image viewer optimized to walk through generated images. You can quickly sort out images into three different target folders, and press F3 to view the contained prompt text of an image.
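WebUI normally stores the generation settings as text inside each PNG it writes, which is what a viewer can display. As a rough sketch of how such text can be read without extra tools, the code below walks the chunks of a PNG file and collects its tEXt entries. That WebUI uses a key named "parameters" is my assumption about the file layout, and other chunk types such as iTXt are ignored here.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_text_chunks(data):
    """Return a dict of keyword -> text from the tEXt chunks of a PNG."""
    if data[:8] != PNG_SIGNATURE:
        raise ValueError("not a PNG file")
    texts = {}
    pos = 8
    while pos + 8 <= len(data):
        # Each chunk: 4-byte length, 4-byte type, data, 4-byte CRC.
        length, chunk_type = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        if chunk_type == b"tEXt":
            keyword, _, text = body.partition(b"\x00")
            texts[keyword.decode("latin-1")] = text.decode("latin-1")
        elif chunk_type == b"IEND":
            break
        pos += 12 + length  # skip header, data and CRC
    return texts
```

To try it on a generated image, read the file in binary mode and look up the assumed key, for example `png_text_chunks(open(path, "rb").read()).get("parameters")`.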